It can feel as if artificial intelligence has always been with us. In reality, AI became a practical tool only a couple of years ago, and it is still not entirely clear where this technology will lead: will it help us, or is it time to look for John Connor? Okay, okay, let’s not get into that. What matters here is this: YouTube has introduced strict rules for labeling content created with artificial intelligence. So if you work with an AI assistant to create images and videos, it is worth learning how to label such material properly so that the platform does not block your videos. Let’s go!

What content should be labeled?

YouTube actually takes a fairly tolerant view of generated content, provided that it does not mislead the viewer. And as you well know, the platform does not like bad boys and girls who break the rules over and over again. So it warns openly that if a creator repeatedly fails to label AI content, their videos may be removed or the channel may be suspended from the YouTube Partner Program. And we don’t want that under any circumstances.

So creators are required to disclose content that:

This may include content that has been modified or generated in whole or in part using audio, video, or image creation or editing tools.

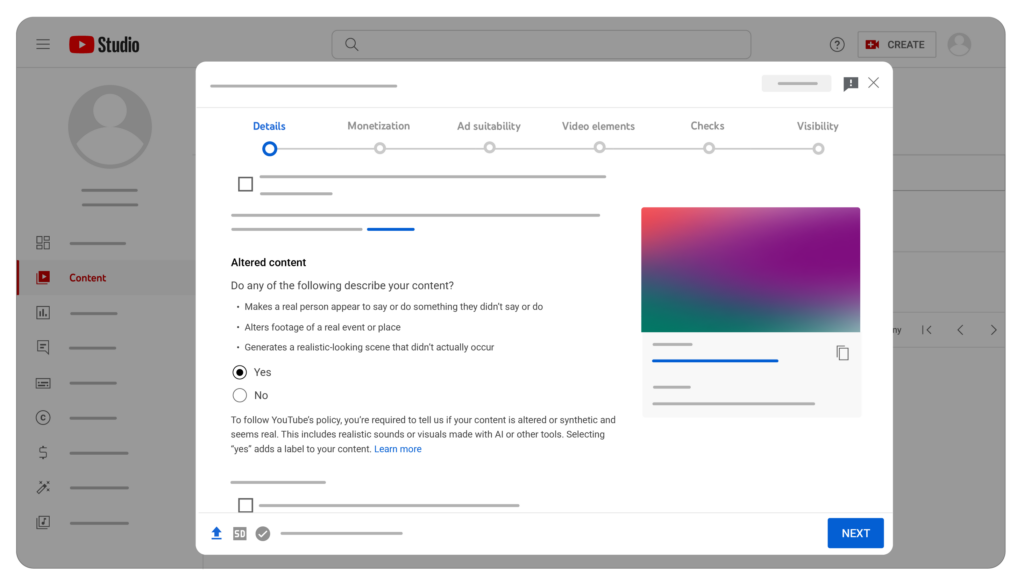

For now, this can only be done when uploading videos from a computer, but YouTube promises that this feature will soon be available on other devices too. So, if your video contains generated content that needs to be reported:

Well, that’s all, no complications and no future problems with the platform.

If you create Shorts videos using YouTube’s AI tools, such as Dream Track and Dream Screen, then you don’t need to report altered content; a notification about the use of AI will be added automatically.

In addition, the platform itself will select “Yes” in the “Altered Content” section if the title of your video indicates that it uses generated content.

Let’s go over the basics one more time so you understand how everything works. If you still have questions, you can always clarify certain points in Google Help.

Here are some examples of content, alterations, and video creation and editing tools that do not need to be reported:

Unrealistic content

Minor alterations

Keep in mind that this is not a complete list, just basic examples.

Conclusion

Well, as you can see, labeling AI-generated content on YouTube is quick and simple, and it will help you avoid both problems with the platform and misunderstandings with your audience. Good luck making new videos!